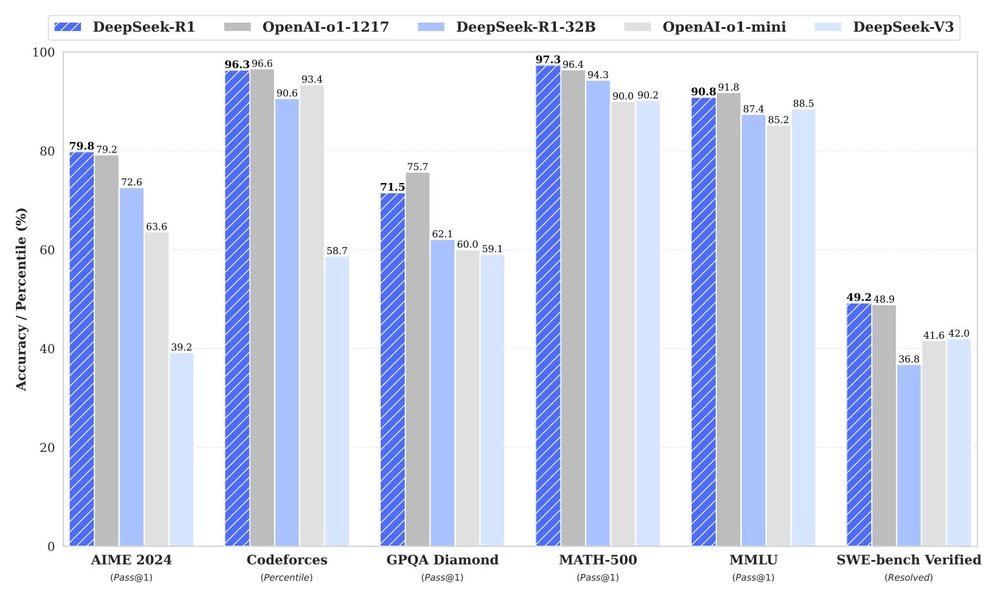

The launch of Deepseek R1 has made waves in the AI industry, drawing global attention. This Chinese-developed model claims to surpass OpenAI’s flagship model, ChatGPT-01, in terms of performance.

What sets Deepseek R1 apart is not just its intelligence but also the fact that it was developed under significant technological constraints, particularly the trade barriers imposed by the United States, which have made it difficult for China to access crucial high-level processing chips. Despite these challenges, Deepseek R1 has been developed efficiently at a lower cost. This could signal that China is reducing its reliance on the West and stepping into its own AI era, leading many to consider it a game-changer in the AI industry.

However, to provide a more balanced perspective, Techsauce has reached out to Dr. Pat Phatphanthun, a technology expert at MIT Media Lab, also known as Dr. PP, a researcher in Human-AI Interaction. Dr. Phatphanthun believes that while Deepseek marks an important development in AI, it may not represent the groundbreaking leap many perceive it to be.

Dr. Phatphanthun emphasizes that the definition of a breakthrough depends on individual perspectives and that this is his opinion as a researcher in Human-AI Interaction, considering the technological dimension and the broader impact on the AI industry.

Why Deepseek May Not Be a Technological Leap

Dr. Phatphanthun posted on his personal Facebook (Pat Pataranutaporn) with the title “My take on DeepSeek: Innovation?” He began by pointing out that Deepseek may not be the transformative innovation that many are excited about. The hype surrounding the news might not stem from technological advancement itself, but rather from the geopolitical context involving the U.S. and the feelings some people may have towards Sam Altman, the CEO of OpenAI. These factors may have contributed to the extra attention Deepseek has garnered.

Another point Dr. Phatphanthun raised is that the news of Nvidia’s stock dropping 17%, resulting in a nearly $600 billion market value loss, does not necessarily mean Deepseek is a true innovation. The stock market is often driven more by emotion and other factors than by technological achievements alone.

He also gave the example of Elon Musk, whose tweets can cause the stock prices of certain companies to skyrocket or plummet, even when the content of his tweets isn’t directly related to technology or new developments. This highlights that market sentiment and financial trends are not reliable indicators of true innovation.

4 Reasons Why Deepseek May Not Be a Game-Changer

Small Language Models Are Not New Dr. Phatphanthun pointed out that the concept of creating smaller AI models that can run on general-purpose devices, such as personal computers or smartphones, is not new. This research and development has been ongoing since at least 2020, or possibly earlier. Techniques like “Pruning,” which removes unnecessary parts of the model to make it smaller while still maintaining good results, and “Distillation,” where a smaller model learns from a larger one, have been widely used by major AI companies such as OpenAI, Google, and Meta for some time. Therefore, the act of making models smaller is a standard practice in the AI industry and doesn’t qualify as a “breakthrough innovation.”

Base Models Are Not Built from Scratch Another issue with Deepseek’s base model is that it wasn’t developed entirely from scratch. Instead, the company improved upon existing models, which kept the development cost lower than starting from scratch. According to Deepseek’s research, two main approaches were used:

- Reinforcement Learning (RL): This technique allows the AI to learn from trial and error by rewarding correct answers and penalizing incorrect ones, thus improving the model over time.

- Distillation: This technique enables a smaller model to learn from a larger model, using open-source base models from Meta and Alibaba.

Dr. Phatphanthun explained that this means Deepseek didn’t train a new model from the ground up (train from scratch), but rather improved an existing model (post-training). This is why Deepseek uses fewer resources, such as GPUs, and is more cost-effective than many might assume. However, when comparing Deepseek to OpenAI, this isn’t a fair comparison, as OpenAI creates models from scratch, which is far more resource-intensive.

Chain-of-Thought Isn’t Real Reasoning “Chain-of-Thought” (CoT) is a technique that helps AI think through steps in order to give more reasoned answers. However, Dr. Phatphanthun argued that this still isn’t real reasoning, as it’s essentially just mimicking how humans reason rather than actually understanding the meaning behind the answers.

This approach is known as probabilistic reasoning, where the AI guesses an answer based on the likelihood of certain outcomes learned from data. This differs from symbolic reasoning, which involves logical systems and formal rules, such as mathematics and logic, that allow for true, structured reasoning. Chain-of-Thought prompting itself isn’t new, having first appeared in research from Google Brain in 2022, showing that even without fine-tuning, a well-designed prompt could lead to better reasoning.

To achieve true reasoning capabilities, Dr. Phatphanthun suggested exploring NeuroSymbolic methods, which could help AI understand and reason in a more structured and logical manner.

Incomplete Comparisons Dr. Phatphanthun also mentioned that Deepseek’s tests involve comparing their model to others to showcase its superior performance. However, these comparisons are incomplete because they don’t account for simpler methods like using Chain-of-Thought without reinforcement learning (RL).

There’s a lingering question about whether Deepseek’s improvements come from RL or simply because Chain-of-Thought is a technique that naturally improves the model’s performance. For example, using LLAMA with Chain-of-Thought might show that Deepseek’s improvements are more due to CoT than RL. Interestingly, even LLAMA with only 8 billion parameters performs well on benchmarks like MMLU, suggesting that good performance can be achieved without RL.

Dr. Phatphanthun’s Perspective on Deepseek and the Future of AI

Dr. Phatphanthun believes the excitement surrounding Deepseek might cause people to misunderstand its true impact. He compares it to attaching a race car to a cart and claiming the cart can now go as fast as the race car, implying that Deepseek may not represent a true technological breakthrough, but rather a case of using certain techniques or contexts to make it appear more advanced than it is.

That being said, Dr. Phatphanthun doesn’t dismiss small models entirely. In fact, he sees the reduction in environmental impact due to smaller models as something worth praising. This is an important consideration as researchers, such as Kate Crawford, have pointed out in her book the significant energy savings associated with smaller AI models.

As for Deepseek, Dr. Phatphanthun acknowledged that innovations like GRPO (Generalized Reward Prediction Optimization) and multi-token prediction are valuable, but they are more of a logical next step rather than a revolution in the field.

He expressed greater excitement about Mechanistic Interpretability work done by Anthropic, which aims to make the reasoning process of AI models more understandable by humans, providing a clearer view into how AI models work.

Dr. Phatphanthun also noted that global AI remains largely influenced by frameworks originally established in the United States. Even if China develops new models, they are often incremental improvements on existing U.S.-based approaches. For China to show true innovation, Dr. Phatphanthun believes they must introduce a completely new AI category and dominate it, rather than merely refining existing ideas.